Sharing is caring, how to make the most of your GPUs? part 1, Time-sharing

2024-07-02 in RHOAI, PSAP, Work

Today, my teammate Carlos Camacho published a blog post that continues the work I started on the performance evaluation of time-sharing on NVIDIA GPUs:

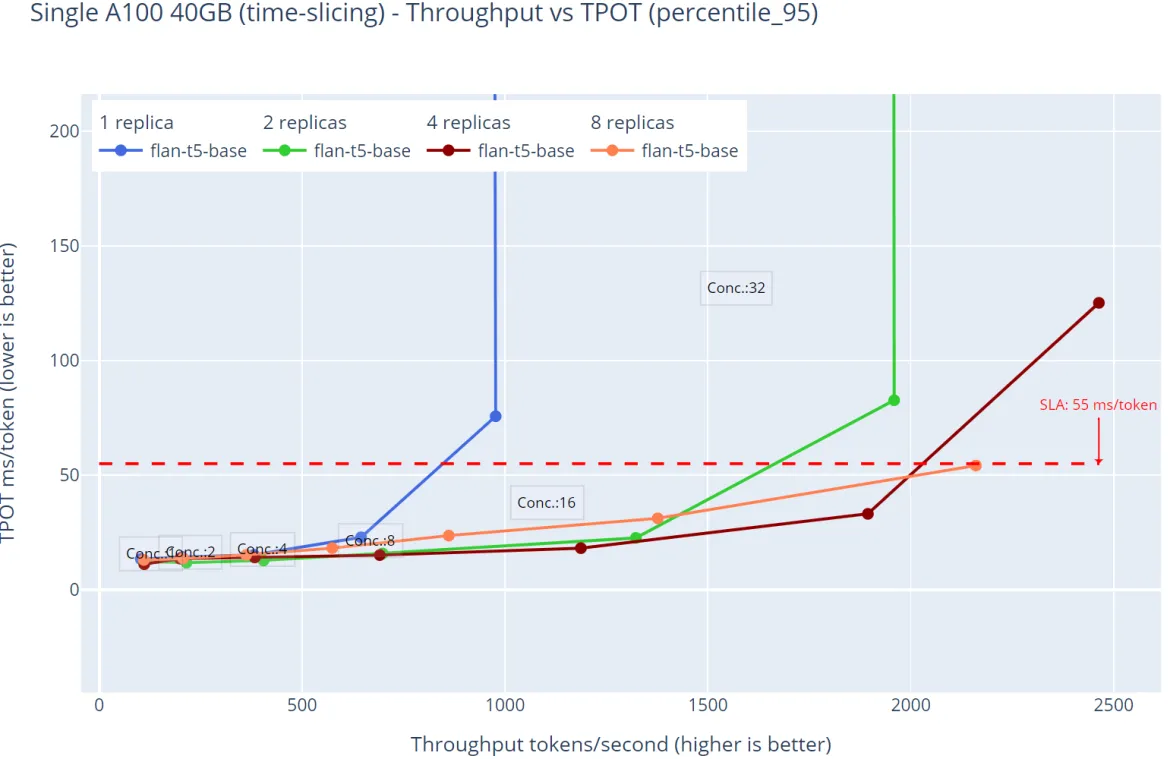

GPU oversubscription is like “carpooling” for your GPU – you’re getting more people (processes) into the same car (GPU) to use it more efficiently. This approach helps you get more throughput while keeping overall system latency within specific service level agreements (SLAs) and reducing the time the resources sit idle. Of course, there can be some traffic jams (too many processes racing for resources), but with the right strategies and an understanding of your workloads, you can keep your systems performing consistently well.